Comprehensive MEAN (MongoDB, Express, Angular, and Node.js) with Docker I

Sometimes I want to learn a topic comprehensively: not only how to do something, but also how people usually do it and which tools they use. That is the idea behind this post, which covers both the MEAN stack and the tools commonly used with it.

I will introduce them in four parts.

- Environment setup - developing MEAN with Docker (this post).

- Node.js and Express.

- AngularJS.

- Continuous Development.

Project Architecture

/app

|---/pages

|---index.html

|---index.js

|---/about

| |---memoAbout.html

|---/memo

|---memoController.js

|---memoPage.html

server.js

Dockerfile

docker-compose.yml

package.json

bower.json

Gulpfile.js

Why Docker

There are several reasons why I chose Docker as my development environment.

- Team members can easily share code no matter what their local environment looks like.

- It is highly portable: you can run your app anywhere Docker is installed. There are also many Docker solutions for servers, such as Kubernetes and ECS.

- It suits this MEAN project especially well. Installing MongoDB locally is painful, and so is installing it on a server. If you don't want to look for an online MongoDB host, you can easily get MongoDB from Docker with one command.

Install and Start Docker

Refer to the installation section of Docker's documentation to see how to install it.

After installing, you should be able to run the docker command.

Now you need a docker-machine to run your app.

Refer to the docker-machine section of Docker's documentation to see how to start one.

After finishing the previous two steps, you should be able to see your docker-machine running by running docker-machine ls.

Dockerfile

A Dockerfile describes how to set up your Docker container. Below is my Dockerfile for this MEAN project.

#Retrieve the official node image. By default it is based on Debian.

FROM node

#Add my app folder to my node server

ADD . /app

WORKDIR /app

RUN npm install

#expose a port to allow external access

EXPOSE 8080

#Start my node application

CMD ["node", "server.js"]

With this Dockerfile, you can easily share your environment with your team members, or build your image and run it anywhere Docker is installed.

docker-compose.yml

You can also organize your project with a docker-compose file. By doing so, you can run multiple containers with one command and save a lot of time compared with typing docker run commands.

    version: '2'
    services:
      memo:
        # look for the Dockerfile in the current folder
        build: .
        ports:
          - "8080:8080"
        depends_on:
          - mongo
        container_name: memo
        image: memo
      mongo:
        image: mongo
        container_name: memo-mongo

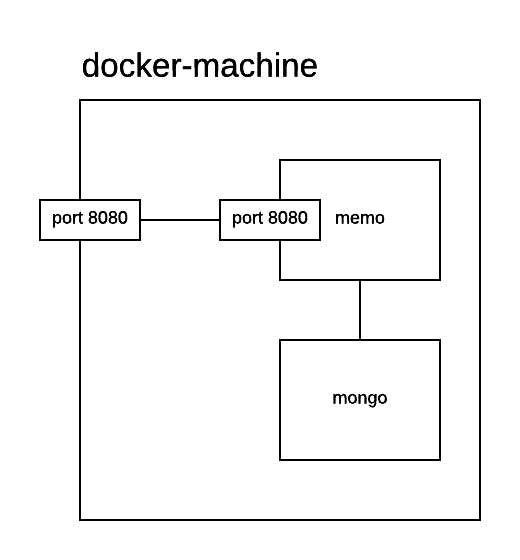

Let's talk about this docker-compose file. Now we have two services. One is memo which is our todolist app, another is mongo which is our MongoDB. Also, memo open port 8080 mapping. Your docker machine will looks like below.

Container linkage

Why do we want to link two containers? By linking them, we don't need to hard-code a container's IP address. In fact, we don't even need to know the IP address, which might change from time to time.

You might also notice that container memo is linked with container mongo even though it seems I didn't do anything to link them.

There are two mechanisms in Docker for linking two containers.

- Using docker-compose.yml version 2, as I do. With version 2, Compose puts all services on a shared default network where each container can be reached by its service name, so you can use the service name as the hostname.

- Using --link. You can use it like docker run --link <name or id>:alias, or, if you use docker-compose.yml version 1, you can add a links section like this:

links:

- db

- db:database

- redis

This method puts the linked service's IP address into environment variables. See Docker's documentation for details. For example, if you are using a Node application, you can retrieve the IP address with process.env.DB_PORT_5432_TCP_ADDR.
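To make the two linkage styles concrete, here is a minimal sketch of how a Node application might choose the MongoDB host. It assumes the service (or link alias) is named mongo and that MongoDB listens on its default port 27017, so the legacy link variable would be MONGO_PORT_27017_TCP_ADDR. The function name buildMongoUrl and the database name memo are hypothetical and not taken from the project.

```javascript
// Build a MongoDB connection URL that works with both linkage styles.
// Assumptions: service/alias "mongo", default port 27017,
// and a hypothetical database name "memo".
function buildMongoUrl(env) {
  // Legacy --link / version 1 "links": Docker injects
  // <ALIAS>_PORT_<port>_TCP_ADDR into the environment.
  const linkedAddr = env.MONGO_PORT_27017_TCP_ADDR;

  // Version 2 networking: the service name itself is the hostname.
  const host = linkedAddr || 'mongo';

  return `mongodb://${host}:27017/memo`;
}

console.log(buildMongoUrl(process.env));
```

Falling back to the service name means the same code runs unchanged whether the containers were started with links or with a version 2 compose file.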

depends_on

depends_on:

- mongo

This indicates that memo depends on the mongo service, so the memo container will not start until the mongo container has started.

Note: when I say the mongo container has started, I only mean that the container has finished its start process, not that the service inside it has finished initializing. So you might find that your app is ready while your database is still initializing, even though your app depends on your database. You can retry the connection after a connection failure.
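The retry idea can be sketched as a small helper. This is only an illustration, not the code from the project: connectWithRetry, its options, and the connectFn argument are hypothetical names, and connectFn stands in for whatever promise-returning call your MongoDB driver uses to open a connection.

```javascript
// Retry an async connect function with a fixed delay between attempts.
// connectFn is a hypothetical stand-in for a database driver call
// that returns a Promise.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function connectWithRetry(connectFn, { retries = 5, delayMs = 2000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      // Resolve with the connection as soon as one attempt succeeds.
      return await connectFn();
    } catch (err) {
      lastError = err;
      console.error(`Connection attempt ${attempt}/${retries} failed: ${err.message}`);
      // Wait before the next attempt, but not after the last one.
      if (attempt < retries) await sleep(delayMs);
    }
  }
  // Every attempt failed: surface the last error to the caller.
  throw lastError;
}
```

In the memo app, this could wrap the database connection made at startup, so that a MongoDB container that is still initializing only delays the app instead of crashing it.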

Command

Instead of using docker run for each container, we can use docker-compose up -d to start all the containers at once. You should be able to access your container at http://your-docker-machine-ip:8080.

If you want to stop all your containers, you can run docker-compose down --rmi all, which stops and removes the containers and removes the images.

Further Reading

You can find this code in my GitHub repo MEAN pracitce.

- Kubernetes - a container orchestration system originally developed by Google.

- ECS - Amazon's service for running Docker containers.